
| (71 intermediate revisions by 8 users not shown) |

| Line 1: |

Line 1: |

| − | ==Ruby==

| + | == High level components of Ruby == |

| − | === High level components of Ruby ===

| |

| | | | |

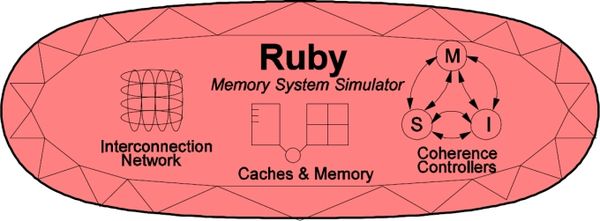

| − | Ruby implements a detailed simulation model for the memory subsystem. It models inclusive/exclusive cache hierarchies with various replacement policies, coherence protocol implementations, interconnection networks, DMA and memory controllers, various sequencers that initiate memory requests and handle responses. The models are modular, flexible and highly configurable. Three key aspects of these models are: | + | Ruby implements a detailed simulation model for the memory subsystem. It models inclusive/exclusive cache hierarchies with various [[Replacement_policy|replacement policies]], coherence protocol implementations, interconnection networks, DMA and memory controllers, various sequencers that initiate memory requests and handle responses. The models are modular, flexible and highly configurable. Three key aspects of these models are: |

| | | | |

| | # Separation of concerns -- for example, the coherence protocol specifications are separate from the replacement policies and cache index mapping, the network topology is specified separately from the implementation. | | # Separation of concerns -- for example, the coherence protocol specifications are separate from the replacement policies and cache index mapping, the network topology is specified separately from the implementation. |

| Line 11: |

Line 10: |

| | [[File:ruby_overview.jpg|600px|center]] | | [[File:ruby_overview.jpg|600px|center]] |

| | | | |

| − | ==== SLICC + Coherence protocols: ====

| + | === SLICC + Coherence protocols: === |

| − | Need to say what SLICC is and what its purpose is.

| + | |

| − | Talk about the high-level structure of a typical coherence protocol file that SLICC uses to generate code.

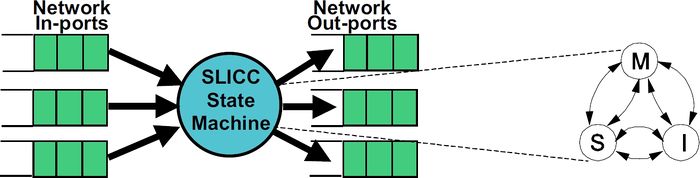

| + | '''''[[SLICC]]''''' stands for ''Specification Language for Implementing Cache Coherence''. It is a domain-specific language used for specifying cache coherence protocols. In essence, a cache coherence protocol behaves like a state machine, and SLICC is used to specify the behavior of that state machine. Since the aim is to model the hardware as closely as possible, SLICC imposes constraints on the state machines that can be specified. For example, SLICC can restrict the number of transitions that can take place in a single cycle. Apart from protocol specification, SLICC also combines some of the components in the memory model. As can be seen in the following picture, the state machine takes its input from the input ports of the interconnection network and queues its output at the output ports of the network, thus tying together the cache/memory controllers with the interconnection network itself. |

| − | A simple example structure from protocol like MI_example can help here.

| |

| − | | |

| − | SLICC stands for ''Specification Language for Implementing Cache Coherence''. It is a domain-specific language used for specifying cache coherence protocols. In essence, a cache coherence protocol behaves like a state machine, and SLICC is used to specify the behavior of that state machine. Since the aim is to model the hardware as closely as possible, SLICC imposes constraints on the state machines that can be specified. For example, SLICC can restrict the number of transitions that can take place in a single cycle. Apart from protocol specification, SLICC also combines some of the components in the memory model. As can be seen in the following picture, the state machine takes its input from the input ports of the interconnection network and queues its output at the output ports of the network, thus tying together the cache/memory controllers with the interconnection network itself. | |

| | | | |

| | [[File:slicc_overview.jpg|700px|center]] | | [[File:slicc_overview.jpg|700px|center]] |

| | | | |

| − | The language itself is syntactically similar to C/C++. The SLICC compiler generates C++ code for the state machine. Additionally, it can generate HTML documentation for the protocol.

| + | The following cache coherence protocols are supported: |

| − | | |

| − | ''Nilay will do it''

| |

| − | | |

| − | ==== Protocol independent memory components ====

| |

| − | # Cache Memory

| |

| − | # Replacement Policies

| |

| − | # Memory Controller

| |

| − | | |

| − | ''Arka will do it''

| |

| − | | |

| − | ==== Interconnection Network ====

| |

| − | | |

| − | The interconnection network connects the various components of the memory hierarchy (cache, memory, DMA controllers) together. There are three key parts to this:

| |

| − |

| |

| − | # '''Topology specification''': These are specified with Python files that describe the topology (mesh, crossbar, etc.), link latencies and link bandwidth. The following picture shows a high-level view of several well-known network topologies, all of which can be easily specified.

| |

| − | [[File:topology_overview.jpg|1200px|center]]

| |

| − | # '''Cycle-accurate network model''': [http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4919636 Garnet] is a cycle-accurate, pipelined network model that builds and simulates the specified topology. It simulates the router pipeline and the movement of flits across the network, subject to the routing algorithm, latency and bandwidth constraints.

| |

| − | # '''Network Power model''': The [http://www.princeton.edu/~peh/orion.html Orion] power model is used to keep track of router and link activity in the network. It calculates both router static power and link and router dynamic power as flits move through the network.

| |

| − | | |

| − | More details about the network model implementation are described [[#Interconnection_Network_2|here]]
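Topology files are written in Python. The standalone sketch below illustrates what such a file computes: it enumerates the unidirectional links of a 2D mesh, each with a per-link latency and bandwidth. The `Link` class and `build_mesh_links()` function are hypothetical and are not gem5's topology API. The default latency and bandwidth values are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """Hypothetical unidirectional link between two routers."""
    src: int        # router id at the sending end
    dst: int        # router id at the receiving end
    latency: int    # link latency in cycles (placeholder unit)
    bandwidth: int  # bandwidth factor (placeholder unit)


def build_mesh_links(rows, cols, latency=1, bandwidth=16):
    """Return the unidirectional links of a rows x cols 2D mesh.

    Router r sits at (r // cols, r % cols). Each pair of horizontally or
    vertically adjacent routers gets one link in each direction.
    """
    links = []
    for r in range(rows * cols):
        row, col = divmod(r, cols)
        if col + 1 < cols:  # east neighbour
            links.append(Link(r, r + 1, latency, bandwidth))
            links.append(Link(r + 1, r, latency, bandwidth))
        if row + 1 < rows:  # south neighbour
            links.append(Link(r, r + cols, latency, bandwidth))
            links.append(Link(r + cols, r, latency, bandwidth))
    return links


print(len(build_mesh_links(2, 2)))  # four bidirectional links in a 2x2 mesh
```

A crossbar or ring would differ only in which router pairs receive links. Garnet then simulates traffic over whatever link list the topology produces.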

| |

| − | | |

| − | === Implementation of Ruby ===

| |

| − | | |

| − | ==== Directory Structure ====

| |

| − | | |

| − | * '''src/mem/'''

| |

| − | ** '''protocols''': SLICC specification for coherence protocols

| |

| − | ** '''slicc''': implementation for SLICC parser and code generator

| |

| − | ** '''ruby'''

| |

| − | *** '''buffers''': implementation for message buffers that are used for exchanging information between the cache, directory, memory controllers and the interconnect

| |

| − | *** '''common''': frequently used data structures, e.g. Address (with bit-manipulation methods), histogram, data block, basic types (int32, uint64, etc.)

| |

| − | *** '''eventqueue''': Ruby’s event queue API for scheduling events on the gem5 event queue

| |

| − | *** '''filters''': various Bloom filters (stale code from GEMS)

| |

| − | *** '''network''': Interconnect implementation, sample topology specification, network power calculations

| |

| − | *** '''profiler''': Profiling for cache events, memory controller events

| |

| − | *** '''recorder''': Cache warmup and access trace recording

| |

| − | *** '''slicc_interface''': Message data structure, various mappings (e.g. address to directory node), utility functions (e.g. conversion between address & int, convert address to cache line address)

| |

| − | *** '''system''': Protocol independent memory components – CacheMemory, DirectoryMemory, Sequencer, RubyPort

| |

| − | | |

| − | ==== SLICC ====

| |

| − | Explain functionality/ capability of SLICC

| |

| − | Talk about

| |

| − | AST, Symbols, Parser and code generation in some detail, but there is NO need to cover every file and/or function.

| |

| − | Few examples should suffice.

| |

| − | | |

| − | ''Nilay will do it''

| |

| − | | |

| − | SLICC is a domain specific language for specifying cache coherence protocols. The SLICC compiler generates C++ code for different controllers, which can work in tandem with other parts of Ruby. The compiler also generates an HTML specification of the protocol.

| |

| − | | |

| − | ===== Input To the Compiler =====

| |

| − | | |

| − | The SLICC compiler takes as input the files that specify the controllers involved in the protocol. The .slicc file lists the different files used by the particular protocol under consideration. For example, when specifying the MI protocol using SLICC, we may use MI.slicc as the file that lists all the files necessary for the protocol. The files necessary for specifying a protocol include the definitions of the state machines for the different controllers, and of the network messages that are passed between these controllers.

| |

| − | | |

| − | The files have a syntax similar to that of C++. The compiler, written using [http://www.dabeaz.com/ply/ PLY (Python Lex-Yacc)], parses these files to create an Abstract Syntax Tree (AST). The AST is then traversed to build some of the internal data structures. Finally, the compiler outputs the C++ code by traversing the tree again. The AST represents the hierarchy of the different structures present within a state machine. We describe these structures next.

| |
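The two traversals of the AST described above can be sketched on a toy tree. The node classes (`StateDecl`, `StateDeclarationAST`) and the functions below are hypothetical stand-ins, not the classes in src/mem/slicc. The first pass builds a symbol table, and the second pass uses it to emit C++ text.

```python
from dataclasses import dataclass, field


@dataclass
class StateDecl:
    """Hypothetical AST node for one entry of a state_declaration."""
    name: str
    permission: str
    desc: str


@dataclass
class StateDeclarationAST:
    """Hypothetical AST node for a whole state_declaration block."""
    type_name: str
    states: list = field(default_factory=list)


def build_symbols(ast):
    """First traversal: map each state name to its access permission."""
    symbols = {}
    for state in ast.states:
        if state.name in symbols:
            raise ValueError(f"duplicate state {state.name}")
        symbols[state.name] = state.permission
    return symbols


def generate_cpp(ast, symbols):
    """Second traversal: emit a C++ enum, annotated from the symbol table."""
    lines = [f"enum {ast.type_name} {{"]
    for state in ast.states:
        lines.append(f"    {ast.type_name}_{state.name}, "
                     f"// {symbols[state.name]}: {state.desc}")
    lines.append(f"    {ast.type_name}_NUM")
    lines.append("};")
    return "\n".join(lines)


ast = StateDeclarationAST("State", [
    StateDecl("I", "Invalid", "Not Present/Invalid"),
    StateDecl("M", "Read_Write", "Modified"),
])
print(generate_cpp(ast, build_symbols(ast)))
```

Splitting symbol collection from code emission means the emitter can rely on every name being resolved and unique before it writes anything.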

| − | | |

| − | ===== Protocol State Machines =====

| |

| − | In this section we take a closer look at what goes into a file containing the specification of a state machine. Each state machine is described using SLICC's '''machine''' datatype. Each machine has several different types of members. Machines for cache and directory controllers include cache memory and directory memory data members, respectively.

| |

| − | | |

| − | | |

| − | * In order to let the controller receive messages from different entities in the system, the machine has a number of '''Message Buffers'''. These act as input and output ports for the machine. Here is an example specifying the output ports.

| |

| − | | |

| − | MessageBuffer requestFromCache, network="To", virtual_network="2", ordered="true";

| |

| − | MessageBuffer responseFromCache, network="To", virtual_network="4", ordered="true";

| |

| − | | |

| − | Note that Message Buffers have attributes (the network direction, the virtual network, and whether the buffer is ordered) that need to be specified correctly. Here is another example, this time specifying the input ports.

| |

| − | | |

| − | MessageBuffer forwardToCache, network="From", virtual_network="3", ordered="true";

| |

| − | MessageBuffer responseToCache, network="From", virtual_network="4", ordered="true";
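The buffer attributes above can be illustrated with a small Python model. This sketch rests on simplifying assumptions and is not Ruby's C++ MessageBuffer. Each message becomes ready `latency` time units after it is enqueued. `ordered` is taken to mean that no message may be dequeued before one that was enqueued ahead of it. `network` and `virtual_network` are only recorded here, not used for routing.

```python
import heapq
import itertools


class MessageBuffer:
    """Sketch of a buffer carrying the attributes used in the SLICC
    declarations (network direction, virtual network, ordering)."""

    def __init__(self, network, virtual_network, ordered):
        assert network in ("To", "From")
        self.network = network
        self.virtual_network = virtual_network
        self.ordered = ordered
        self._heap = []                # entries: (ready_time, seq, msg)
        self._seq = itertools.count()  # enqueue order, breaks ready-time ties
        self._last_ready = 0

    def enqueue(self, msg, now, latency):
        ready = now + latency
        if self.ordered:
            # An ordered buffer never lets a message overtake an earlier one.
            ready = max(ready, self._last_ready)
            self._last_ready = ready
        heapq.heappush(self._heap, (ready, next(self._seq), msg))

    def is_ready(self, now):
        return bool(self._heap) and self._heap[0][0] <= now

    def dequeue(self, now):
        if not self.is_ready(now):
            raise RuntimeError("no message ready")
        return heapq.heappop(self._heap)[2]
```

With `ordered=True`, a short-latency message enqueued after a long-latency one waits behind it. With `ordered=False`, it can be delivered first.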

| |

| − | | |

| − | | |

| − | | |

| − | * Next, the machine includes a declaration of the '''states''' that the machine can reach. In a cache coherence protocol, states can be of two types: stable and transient. A cache block is said to be in a stable state if, in the absence of any activity (an incoming request for the block from another controller, for example), the cache block would remain in that state forever. Transient states are required for transitioning between stable states; they are needed whenever the transition between two stable states cannot be done atomically. The following example shows how states are declared. SLICC has a keyword, '''state_declaration''', that must be used for declaring states.

| |

| − | | |

| − | state_declaration(State, desc="Cache states") {

| |

| − | I, AccessPermission:Invalid, desc="Not Present/Invalid";

| |

| − | II, AccessPermission:Busy, desc="Not Present/Invalid, issued PUT";

| |

| − | M, AccessPermission:Read_Write, desc="Modified";

| |

| − | MI, AccessPermission:Busy, desc="Modified, issued PUT";

| |

| − | MII, AccessPermission:Busy, desc="Modified, issued PUTX, received nack";

| |

| − | IS, AccessPermission:Busy, desc="Issued request for LOAD/IFETCH";

| |

| − | IM, AccessPermission:Busy, desc="Issued request for STORE/ATOMIC";

| |

| − | }

| |

| − | | |

| − | The states I and M are the only stable states in this example. Again note that certain attributes have to be specified with the states.
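The state table above can be mirrored as plain data to check which states are stable. This is a sketch, not SLICC output. It relies on an observation specific to this example: every transient state carries the Busy permission. That correspondence is assumed for this table only and is not a general SLICC rule.

```python
from enum import Enum


class AccessPermission(Enum):
    Invalid = "Invalid"
    Busy = "Busy"
    Read_Only = "Read_Only"
    Read_Write = "Read_Write"


# States from the state_declaration example: name -> (permission, desc)
CACHE_STATES = {
    "I":   (AccessPermission.Invalid,    "Not Present/Invalid"),
    "II":  (AccessPermission.Busy,       "Not Present/Invalid, issued PUT"),
    "M":   (AccessPermission.Read_Write, "Modified"),
    "MI":  (AccessPermission.Busy,       "Modified, issued PUT"),
    "MII": (AccessPermission.Busy,       "Modified, issued PUTX, received nack"),
    "IS":  (AccessPermission.Busy,       "Issued request for LOAD/IFETCH"),
    "IM":  (AccessPermission.Busy,       "Issued request for STORE/ATOMIC"),
}


def stable_states(states):
    """In this example every transient state is Busy, so the stable
    states are exactly the ones whose permission is not Busy."""
    return sorted(name for name, (perm, _desc) in states.items()
                  if perm is not AccessPermission.Busy)


print(stable_states(CACHE_STATES))
```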

| |

| − | | |

| − | ==== Protocols ====

| |

| − | Need to talk about each protocol being shipped. Need to talk about protocol specific configuration parameters.

| |

| − | NO need to explain every action or every state/events, but need to give overall idea and how it works

| |

| − | and assumptions (if any).

| |

| − | | |

| − | ===== Common Notations and Data Structures =====

| |

| − | | |

| − | ====== '''Coherence Messages''' ======

| |

| − | | |

| − | These are described in the <''protocol-name''>-msg.sm file for each protocol.

| |

| − | | |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! Message !! Description

| |

| − | |-

| |

| − | | '''ACK/NACK''' || positive/negative acknowledgement for requests that wait for the direction of resolution before deciding on the next action. Examples are writeback requests, exclusive requests.

| |

| − | |-

| |

| − | | '''GETS''' || request for shared permissions to satisfy a CPU's load or IFetch.

| |

| − | |-

| |

| − | | '''GETX''' || request for exclusive access.

| |

| − | |-

| |

| − | | '''INV''' || invalidation request. This can be triggered by the coherence protocol itself, or by the next cache level/directory to enforce inclusion or to trigger a writeback for a DMA access so that the latest copy of data is obtained.

| |

| − | |-

| |

| − | | '''PUTX''' || request for writeback of cache block. Some protocols (e.g. MOESI_CMP_directory) may use this only for writeback requests of exclusive data.

| |

| − | |-

| |

| − | | '''PUTS''' || request for writeback of cache block in shared state.

| |

| − | |-

| |

| − | | '''PUTO''' || request for writeback of cache block in owned state.

| |

| − | |-

| |

| − | | '''PUTO_Sharers''' || request for writeback of cache block in owned state but other sharers of the block exist.

| |

| − | |-

| |

| − | | '''UNBLOCK''' || message to unblock next cache level/directory for blocking protocols.

| |

| − | |}

| |

| − | | |

| − | ====== '''AccessPermissions''' ======

| |

| − | | |

| − | These are associated with each cache block and determine what operations are permitted on that block. It is closely correlated with coherence protocol states.

| |

| − | | |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! Permissions !! Description

| |

| − | |-

| |

| − | | '''Invalid''' || The cache block is invalid. The block must first be obtained (from elsewhere in the memory hierarchy) before loads/stores can be performed. No action on invalidates (except maybe sending an ACK). No action on replacements. The associated coherence protocol states are I or NP and are stable states in every protocol.

| |

| − | |-

| |

| − | | '''Busy''' || TODO

| |

| − | |-

| |

| − | | '''Read_Only''' || Only operations permitted are loads, writebacks, invalidates. Stores cannot be performed before transitioning to some other state.

| |

| − | |-

| |

| − | | '''Read_Write''' || Loads, stores, writebacks, invalidations are allowed. Usually indicates that the block is dirty.

| |

| − | |}
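The permission table can be read as two predicates that a controller checks before servicing a core request. In this sketch, Busy is assumed to permit neither loads nor stores, because the table leaves Busy as TODO and the earlier state example uses it only for transient states. The function names are hypothetical.

```python
from enum import Enum


class AccessPermission(Enum):
    Invalid = "Invalid"
    Busy = "Busy"            # assumption: transient, no core access allowed
    Read_Only = "Read_Only"
    Read_Write = "Read_Write"


def can_load(perm):
    # Loads are satisfied only when the block holds valid readable data.
    return perm in (AccessPermission.Read_Only, AccessPermission.Read_Write)


def can_store(perm):
    # Stores need Read_Write; a Read_Only block must first change state.
    return perm is AccessPermission.Read_Write
```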

| |

| − | | |

| − | ====== Data Structures ======

| |

| − | * '''Message Buffers''':TODO

| |

| − | * '''TBE Table''': TODO

| |

| − | * '''Timer Table''': This maintains a map of address-based timers. For each target address, a timeout value can be associated and added to the Timer table. This data structure is used, for example, by the L1 cache controller implementation of the MOESI_CMP_directory protocol to trigger separate timeouts for cache blocks. Internally, the Timer Table uses the event queue to schedule the timeouts. The TimerTable supports a polling-based interface, '''isReady()''' to check if a timeout has occurred. Timeouts on addresses can be set using the '''set()''' method and removed using the '''unset()''' method.

| |

| − | :: '''Related Files''':

| |

| − | :::: src/mem/ruby/system/TimerTable.hh: Declares the TimerTable class

| |

| − | :::: src/mem/ruby/system/TimerTable.cc: Implementation of the methods of the TimerTable class, that deals with setting addresses & timeouts, scheduling events using the event queue.
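A minimal Python sketch of the address-based timer idea follows. It models the set()/unset()/isReady() interface described above, but it is not the C++ TimerTable. It omits event-queue scheduling and uses polling only. The helpers `is_set()` and `next_address()` are added for the sketch.

```python
class TimerTable:
    """Sketch of an address-based timer table (polling interface only)."""

    def __init__(self):
        self._timeouts = {}  # address -> absolute time at which it expires

    def set(self, address, ready_time):
        if address in self._timeouts:
            raise ValueError(f"timer already set for {address:#x}")
        self._timeouts[address] = ready_time

    def unset(self, address):
        del self._timeouts[address]  # raises KeyError if no timer is set

    def is_set(self, address):
        return address in self._timeouts

    def is_ready(self, now):
        # Has any timeout expired by time `now`?
        return any(t <= now for t in self._timeouts.values())

    def next_address(self, now):
        # Earliest-expiring address; only meaningful when is_ready(now).
        if not self.is_ready(now):
            raise RuntimeError("no timeout has expired")
        return min(self._timeouts, key=self._timeouts.get)
```

A controller would poll `is_ready()` each cycle, handle `next_address()`, and then `unset()` it.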

| |

| − | | |

| − | ====== Coherence controller FSM Diagrams ======

| |

| − | * The Finite State Machines show only the stable states

| |

| − | * Transitions are annotated using the notation "'''Event list'''", "'''Event list : Action list'''" or "'''Event list : Action list : Event list'''". For example, Store : GETX indicates that on a Store event, a GETX message was sent, whereas GETX : Mem Read indicates that on receiving a GETX message, a memory read request was sent. Only the main triggers and actions are listed.

| |

| − | * In the diagrams, the transition labels are associated with the arc that cuts across the transition label or the closest arc.

| |

| − | | |

| − | ===== MI example =====

| |

| − | | |

| − | ====== Protocol Overview ======

| |

| − | | |

| − | * This is a simple cache coherence protocol that is used to illustrate protocol specification using SLICC.

| |

| − | * This protocol assumes a 1-level cache hierarchy. The cache is private to each node. The caches are kept coherent by a directory controller. Since the hierarchy is only 1-level, there is no inclusion/exclusion requirement.

| |

| − | * This protocol does not differentiate between loads and stores.

| |

| − | | |

| − | ====== Related Files ======

| |

| − | | |

| − | * '''src/mem/protocols'''

| |

| − | ** '''MI_example-cache.sm''': cache controller specification

| |

| − | ** '''MI_example-dir.sm''': directory controller specification

| |

| − | ** '''MI_example-dma.sm''': dma controller specification

| |

| − | ** '''MI_example-msg.sm''': message type specification

| |

| − | ** '''MI_example.slicc''': container file

| |

| − | | |

| − | ====== Stable States and Invariants ======

| |

| − | | |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''M''' || The cache block has been accessed (read/written) by this node. No other node holds a copy of the cache block

| |

| − | |-

| |

| − | | '''I''' || The cache block at this node is invalid

| |

| − | |}

| |

| − | | |

| − | '''The notation used in the controller FSM diagrams is described [[#Coherence_controller_FSM_Diagrams|here]].'''

| |

| − | | |

| − | ====== Cache controller ======

| |

| − | | |

| − | * Requests, Responses, Triggers:

| |

| − | ** Load, Instruction fetch, Store from the core

| |

| − | ** Replacement from self

| |

| − | ** Data from the directory controller

| |

| − | ** Forwarded request (intervention) from the directory controller

| |

| − | ** Writeback acknowledgement from the directory controller

| |

| − | ** Invalidations from directory controller (on dma activity)

| |

| − | | |

| − | [[File:MI_example_cache_FSM.jpg|450px|right]]

| |

| − | | |

| − | * Main Operation:

| |

| − | ** On a '''load/Instruction fetch/Store''' request from the core:

| |

| − | *** it checks whether the corresponding block is present in the M state. If so, it returns a hit

| |

| − | *** otherwise, if in I state, it issues a GETX request to the directory controller

| |

| − | | |

| − | ** On a '''replacement''' trigger from self:

| |

| − | *** it evicts the block and issues a writeback request to the directory controller

| |

| − | *** it waits for acknowledgement from the directory controller (to prevent races)

| |

| − | | |

| − | ** On a '''forwarded request''' from the directory controller:

| |

| − | *** This means that the block was in M state at this node when the request was generated by some other node

| |

| − | *** It sends the block directly to the requesting node (cache-to-cache transfer)

| |

| − | *** It evicts the block from this node

| |

| − | | |

| − | ** '''Invalidations''' are similar to replacements
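The cache controller behavior above can be written as a (state, event) to (next state, actions) table. This is a simplified, hypothetical subset for illustration. The state names come from the earlier state_declaration, but the event names are illustrative, and MI_example-cache.sm contains more transitions (for example, the NACK race handling).

```python
# Simplified subset of an MI cache controller:
# (current_state, event) -> (next_state, actions)
TRANSITIONS = {
    ("I",  "Load"):          ("IS", ["send GETX to directory"]),
    ("I",  "Store"):         ("IM", ["send GETX to directory"]),
    ("IS", "Data"):          ("M",  ["fill block", "complete load"]),
    ("IM", "Data"):          ("M",  ["fill block", "complete store"]),
    ("M",  "Load"):          ("M",  ["hit"]),
    ("M",  "Store"):         ("M",  ["hit"]),
    ("M",  "Replacement"):   ("MI", ["send PUTX with data to directory"]),
    ("MI", "Writeback_Ack"): ("I",  ["deallocate block"]),
    ("M",  "Fwd_GETX"):      ("I",  ["send data to requestor", "deallocate block"]),
    # Invalidations are handled like replacements.
    ("M",  "Inv"):           ("MI", ["send PUTX with data to directory"]),
}


def step(state, event):
    """Apply one event; unknown (state, event) pairs are protocol errors."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"no transition for {event} in state {state}") from None


def run(events, state="I"):
    """Feed a sequence of events and return the final state."""
    for event in events:
        state, _actions = step(state, event)
    return state
```

Because loads and stores are not differentiated, both paths out of I issue a GETX and both end in M.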

| |

| − | | |

| − | ====== Directory controller ======

| |

| − | | |

| − | * Requests, Responses, Triggers:

| |

| − | ** GETX from the cores, Forwarded GETX to the cores

| |

| − | ** Data from memory, Data to the cores

| |

| − | ** Writeback requests from the cores, Writeback acknowledgements to the cores

| |

| − | ** DMA read, write requests from the DMA controllers

| |

| − | | |

| − | [[File:MI_example_dir_FSM.jpg|450px|right]]

| |

| − | | |

| − | * Main Operation:

| |

| − | ** The directory keeps track of which core has a block in the M state. It designates this core as the owner of the block.

| |

| − | ** On a '''GETX''' request from a core:

| |

| − | *** If the block is not present, a memory fetch request is initiated

| |

| − | *** If the block is already owned by a core, the request must have come from a different core

| |

| − | **** In this case, a forwarded request is sent to the original owner

| |

| − | **** Ownership of the block is transferred to the requestor

| |

| − | ** On a '''writeback''' request from a core:

| |

| − | *** If the core is owner, the data is written to memory and acknowledgement is sent back to the core

| |

| − | *** If the core is not owner, a NACK is sent back

| |

| − | **** This can happen in a race condition

| |

| − | **** The core evicted the block while a forwarded request from some other core was on its way, and the directory had already transferred ownership to that other core

| |

| − | **** The evicting core holds the data till the forwarded request arrives

| |

| − | ** On '''DMA''' accesses (read/write)

| |

| − | *** Invalidation is sent to the owner node (if any). Otherwise data is fetched from memory.

| |

| − | *** This ensures that the most recent data is available.
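The directory operations above can be sketched as a class that tracks one owner per block. This is a simplified, hypothetical model of the logic described, not MI_example-dir.sm. Outgoing messages are appended to a log instead of being sent. The DMA path drops ownership immediately, whereas a real directory would wait for the owner's data.

```python
class MIDirectory:
    """Simplified MI directory: at most one owner per block address."""

    def __init__(self):
        self.owner = {}     # block address -> id of the owning core
        self.messages = []  # log of (destination, message) pairs

    def getx(self, addr, requestor):
        current = self.owner.get(addr)
        if current is None:
            # No cached copy: fetch the block from memory.
            self.messages.append(("memory", f"fetch {addr:#x}"))
        else:
            # Another core owns the block: forward the request to it.
            self.messages.append((current, f"Fwd_GETX {addr:#x} for {requestor}"))
        self.owner[addr] = requestor  # ownership moves to the requestor

    def putx(self, addr, core):
        if self.owner.get(addr) == core:
            del self.owner[addr]
            self.messages.append(("memory", f"write {addr:#x}"))
            self.messages.append((core, "WB_ACK"))
        else:
            # Race: ownership already moved on, so this writeback is stale.
            self.messages.append((core, "WB_NACK"))

    def dma_access(self, addr):
        current = self.owner.pop(addr, None)
        if current is not None:
            self.messages.append((current, f"INV {addr:#x}"))
        else:
            self.messages.append(("memory", f"fetch {addr:#x}"))
```

The NACK branch corresponds to the race described above. Core 0's writeback arrives after the directory has already handed ownership to core 1.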

| |

| − | | |

| − | ====== Other features ======

| |

| − | | |

| − | ** MI protocols don't support LL/SC semantics. A load from a remote core will invalidate the cache block.

| |

| − | ** This protocol has no timeout mechanisms.

| |

| | | | |

| − | ===== MOESI_hammer =====

| + | # '''[[MI_example]]''': example protocol, 1-level cache. |

| | + | # '''[[MESI_Two_Level]]''': single chip, 2-level caches, strictly-inclusive hierarchy. |

| | + | # '''[[MOESI_CMP_directory]]''': multiple chips, 2-level caches, non-inclusive (neither strictly inclusive nor exclusive) hierarchy. |

| | + | # '''[[MOESI_CMP_token]]''': 2-level caches. TODO. |

| | + | # '''[[MOESI_hammer]]''': single chip, 2-level private caches, strictly-exclusive hierarchy. |

| | + | # '''[[Garnet_standalone]]''': protocol to run the Garnet network in a standalone manner. |

| | + | # '''[[MESI Three Level]]''': 3-level caches, strictly-inclusive hierarchy. |

| | | | |

| − | This is an implementation of AMD's Hammer protocol, which is used in AMD's Hammer chip (also known as the Opteron or Athlon 64).

| + | Commonly used notations and data structures in the protocols have been described in detail [[Cache Coherence Protocols|here]]. |

| | | | |

| − | ====== Related Files ====== | + | === Protocol independent memory components === |

| | | | |

| − | * '''src/mem/protocols'''

| + | # '''Sequencer''' |

| − | ** '''MOESI_hammer-cache.sm''': cache controller specification

| + | # '''Cache Memory''' |

| − | ** '''MOESI_hammer-dir.sm''': directory controller specification

| + | # '''Replacement Policies''' |

| − | ** '''MOESI_hammer-dma.sm''': dma controller specification

| + | # '''Memory Controller''' |

| − | ** '''MOESI_hammer-msg.sm''': message type specification

| |

| − | ** '''MOESI_hammer.slicc''': container file

| |

| | | | |

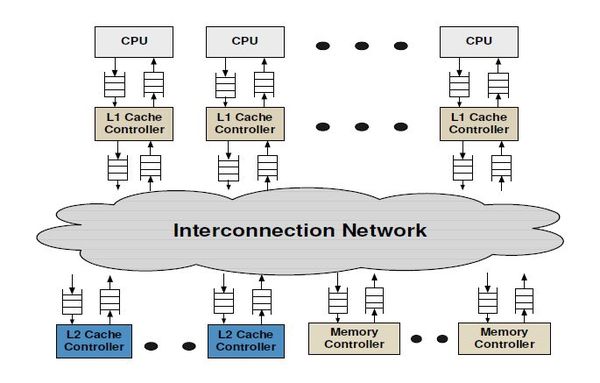

| − | ====== Cache Hierarchy ======

| + | In general, the coherence-protocol-independent components comprise the Sequencer, the Cache Memory structure, the [[Replacement_policy|replacement policies]] and the Memory controller. The Sequencer class is responsible for feeding the memory subsystem (including the caches and the off-chip memory) with load/store/atomic memory requests from the processor. When the memory subsystem completes a request, the response is sent back to the processor via the Sequencer. There is one Sequencer for each hardware thread (or core) simulated in the system. The Cache Memory models a set-associative cache structure with parameterizable size, associativity, and replacement policy. The L1, L2 and L3 caches in the system, where present, are instances of Cache Memory. The replacement policies are kept separate from the Cache Memory, so that different instances of Cache Memory can use different replacement policies of their choice. The Memory Controller is responsible for simulating and servicing any request that misses in all the on-chip caches of the simulated system. The Memory Controller is currently simple, but it faithfully models DRAM bank contention and DRAM refresh. It also models a close-page policy for the DRAM buffer. |

| | | | |
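The separation between a set-associative Cache Memory and its replacement policy can be sketched as follows. This Python model is a simplified illustration, not Ruby's C++ CacheMemory. The names `CacheMemory`, `LRUPolicy`, `touch()` and `victim()` are hypothetical. The index mapping uses the low-order bits of the block address, which is one common choice and an assumption here.

```python
from collections import OrderedDict


class LRUPolicy:
    """Replacement policy kept separate from the cache structure."""

    def touch(self, ways, tag):
        ways.move_to_end(tag)   # mark as most recently used

    def victim(self, ways):
        return next(iter(ways))  # least recently used tag


class CacheMemory:
    """Sketch of a set-associative cache with a pluggable policy."""

    def __init__(self, size_bytes, assoc, block_bytes=64, policy=None):
        self.assoc = assoc
        self.block_bytes = block_bytes
        self.num_sets = size_bytes // (assoc * block_bytes)
        self.policy = policy or LRUPolicy()
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def _index_tag(self, addr):
        block = addr // self.block_bytes
        return block % self.num_sets, block // self.num_sets

    def access(self, addr):
        """Return (hit, evicted_block_address_or_None)."""
        index, tag = self._index_tag(addr)
        ways = self.sets[index]
        if tag in ways:
            self.policy.touch(ways, tag)
            return True, None
        evicted = None
        if len(ways) == self.assoc:
            victim = self.policy.victim(ways)
            del ways[victim]
            evicted = (victim * self.num_sets + index) * self.block_bytes
        ways[tag] = True
        self.policy.touch(ways, tag)
        return False, evicted
```

Swapping in a different policy only requires another object with `touch()` and `victim()`. The cache structure itself does not change, which mirrors the modularity described above.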

| − | This protocol implements a 2-level private cache hierarchy. It assigns separate instruction and data L1 caches, and a unified L2 cache, to each core. These caches are private to each core and are controlled by one shared cache controller. This protocol enforces exclusion between the L1 and L2 caches.

| + | '''''Each component is described in detail [[Coherence-Protocol-Independent Memory Components|here]].''''' |

| | | | |

| − | ====== Stable States and Invariants ====== | + | === Interconnection Network === |

| | | | |

| − | {| border="1" cellpadding="10" class="wikitable"

| + | The interconnection network connects the various components of the memory hierarchy (cache, memory, DMA controllers) together. |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''MM''' || The cache block is held exclusively by this node and is potentially locally modified (similar to conventional "M" state).

| |

| − | |-

| |

| − | | '''O''' || The cache block is owned by this node. It has not been modified by this node. No other node holds this block in exclusive mode, but sharers potentially exist.

| |

| − | |-

| |

| − | | '''M''' || The cache block is held in exclusive mode, but not written to (similar to conventional "E" state). No other node holds a copy of this block. Stores are not allowed in this state.

| |

| − | |-

| |

| − | | '''S''' || The cache line holds the most recent, correct copy of the data. Other processors in the system may hold copies of the data in the shared state, as well. The cache line can be read, but not written in this state.

| |

| − | |-

| |

| − | | '''I''' || The cache line is invalid and does not hold a valid copy of the data.

| |

| − | |}

| |

| | | | |

| − | ====== Cache controller ======

| + | [[File:Interconnection_network.jpg|600px|center]] |

| | | | |

| | + | The key components of an interconnection network are: |

| | | | |

| − | '''The notation used in the controller FSM diagrams is described [[#Coherence_controller_FSM_Diagrams|here]].''' | + | # '''Topology''' |

| | + | # '''Routing''' |

| | + | # '''Flow Control''' |

| | + | # '''Router Microarchitecture''' |

| | | | |

| − | MOESI_hammer supports cache flushing. To flush a cache line, the cache controller first issues a GETF request to the directory to block the line until the flushing is completed. It then issues a PUTF and writes back the cache line.

| + | '''''More details about the network model implementation are described [[Interconnection Network|here]].''''' |

| | | | |

| − | [[File:MOESI_hammer_cache_FSM.jpg|center]] | + | Alternatively, the interconnection network can be replaced with the external simulator [http://www.atc.unican.es/topaz/ TOPAZ]. This simulator is ready to run within gem5 and adds a significant number of [https://sites.google.com/site/atcgalerna/home-1/publications/files/NOCS-2012_Topaz.pdf?attredirects=0 features] over the original Ruby network simulator. It includes new advanced router microarchitectures, new topologies, precision/performance-adjustable router models, mechanisms to speed up network simulation, etc. The presentation of the tool (and the reason why it is not included in the gem5 repositories) is [http://thread.gmane.org/gmane.comp.emulators.m5.users/9651 here]. |

| | | | |

| − | ====== Directory controller ====== | + | == Life of a memory request in Ruby == |

| | | | |

| − | The MOESI_hammer memory module, unlike a typical directory protocol, does not contain any directory state and instead broadcasts requests to all the processors in the system. In parallel, it fetches the data from DRAM and forwards the response to the requesters.

| |
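The broadcast scheme above can be sketched as three small helpers. The helper names are hypothetical, and two details are assumptions rather than facts taken from MOESI_hammer-dir.sm. First, the requester is assumed to wait for one response from every other core plus one from DRAM. Second, data supplied by a cache (the owner) is assumed to take priority over the DRAM copy.

```python
def broadcast_targets(requestor, num_cores):
    """Cores probed for a request: every core except the requester,
    since there is no directory state to filter them."""
    return [core for core in range(num_cores) if core != requestor]


def expected_responses(num_cores):
    # Assumption: one probe response from each other core plus one from DRAM.
    return (num_cores - 1) + 1


def select_data(probe_data, memory_data):
    """Choose the data the requester uses once all responses are in.

    `probe_data` maps core id -> data, or None for a plain ack.
    Assumption: cache-supplied (owner) data overrides the DRAM copy.
    """
    cache_data = [d for d in probe_data.values() if d is not None]
    return cache_data[0] if cache_data else memory_data
```

The cost of keeping no directory state is that every request probes all other cores, whether or not they hold the block.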

| − |

| |

| − | probe filter: TODO

| |

| − |

| |

| − | * '''Stable States and Invariants'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''NX''' || Not Owner, probe filter entry exists, block in O at Owner.

| |

| − | |-

| |

| − | | '''NO''' || Not Owner, probe filter entry exists, block in E/M at Owner.

| |

| − | |-

| |

| − | | '''S''' || Data clean, probe filter entry exists pointing to the current owner.

| |

| − | |-

| |

| − | | '''O''' || Data clean, probe filter entry exists.

| |

| − | |-

| |

| − | | '''E''' || Exclusive Owner, no probe filter entry.

| |

| − | |}

| |

| − |

| |

| − | * '''Controller'''

| |

| − |

| |

| − |

| |

| − | '''The notation used in the controller FSM diagrams is described [[#Coherence_controller_FSM_Diagrams|here]].'''

| |

| − |

| |

| − | [[File:MOESI_hammer_dir_FSM.jpg|center]]

| |

| − |

| |

| − | ===== MOESI_CMP_token =====

| |

| − | This protocol is an extension of MOESI_CMP_directory and it maintains coherence permission by explicitly exchanging and counting tokens.
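The token counting mentioned above follows the general token-coherence rule. Each block has a fixed total number of tokens. A cache may read the block while it holds at least one token, and may write it only while it holds all of them. The class below models just that counting rule for one block at one cache and ignores data validity. It is hypothetical, and MOESI_CMP_token details such as the owner token and persistent requests are not modeled.

```python
class TokenState:
    """Per-block token bookkeeping at one cache (simplified)."""

    def __init__(self, total_tokens):
        self.total = total_tokens
        self.tokens = 0

    def receive(self, n):
        if self.tokens + n > self.total:
            raise ValueError("token count exceeds the total for the block")
        self.tokens += n

    def give(self, n):
        if n > self.tokens:
            raise ValueError("cannot give away tokens not held")
        self.tokens -= n

    def can_read(self):
        return self.tokens >= 1

    def can_write(self):
        return self.tokens == self.total
```

Because tokens are conserved, at most one cache can hold all of them. A writer therefore excludes every reader without a directory having to record sharers.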

| |

| − |

| |

| − | ====== Protocol Overview ======

| |

| − |

| |

| − | ====== Related Files ======

| |

| − |

| |

| − | * '''src/mem/protocols'''

| |

| − | ** '''MOESI_CMP_token-L1cache.sm''': L1 cache controller specification

| |

| − | ** '''MOESI_CMP_token-L2cache.sm''': L2 cache controller specification

| |

| − | ** '''MOESI_CMP_token-dir.sm''': directory controller specification

| |

| − | ** '''MOESI_CMP_token-dma.sm''': dma controller specification

| |

| − | ** '''MOESI_CMP_token-msg.sm''': message type specification

| |

| − | ** '''MOESI_CMP_token.slicc''': container file

| |

| − |

| |

| − | ====== Controller Description ======

| |

| − |

| |

| − | * '''L1 Cache'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''MM''' || The cache block is held exclusively by this node and is potentially modified (similar to conventional "M" state).

| |

| − | |-

| |

| − | | '''MM_W''' || The cache block is held exclusively by this node and is potentially modified (similar to conventional "M" state). Replacements and DMA accesses are not allowed in this state. The block automatically transitions to MM state after a timeout.

| |

| − | |-

| |

| − | | '''O''' || The cache block is owned by this node. It has not been modified by this node. No other node holds this block in exclusive mode, but sharers potentially exist.

| |

| − | |-

| |

| − | | '''M''' || The cache block is held in exclusive mode, but not written to (similar to conventional "E" state). No other node holds a copy of this block. Stores are not allowed in this state.

| |

| − | |-

| |

| − | | '''M_W''' || The cache block is held in exclusive mode, but not written to (similar to conventional "E" state). No other node holds a copy of this block. Only loads and stores are allowed. Silent upgrade happens to MM_W state on store. Replacements and DMA accesses are not allowed in this state. The block automatically transitions to M state after a timeout.

| |

| − | |-

| |

| − | | '''S''' || The cache block is held in shared state by 1 or more nodes. Stores are not allowed in this state.

| |

| − | |-

| |

| − | | '''I''' || The cache block is invalid.

| |

| − | |}

| |

| − |

| |

| − | *'''L2 cache'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''NP''' || Not present: the cache block is not held in this L2 cache.

| |

| − | |-

| |

| − | | '''O''' || The cache block is owned by this node. It has not been modified by this node. No other node holds this block in exclusive mode, but sharers potentially exist.

| |

| − | |-

| |

| − | | '''M''' || The cache block is held in exclusive mode, but not written to (similar to conventional "E" state). No other node holds a copy of this block. Stores are not allowed in this state.

| |

| − | |-

| |

| − | | '''S''' || The cache line holds the most recent, correct copy of the data. Other processors in the system may hold copies of the data in the shared state, as well. The cache line can be read, but not written in this state.

| |

| − | |-

| |

| − | | '''I''' || The cache line is invalid and does not hold a valid copy of the data.

| |

| − | |}

| |

| − |

| |

| − | * '''Directory controller'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''O''' || Owner.

| |

| − | |-

| |

| − | | '''NO''' || Not Owner, block in E/M at Owner.

| |

| − | |-

| |

| − | | '''L''' || Locked, to the current owner.

| |

| − |

| |

| − | |}

| |

| − |

| |

| − | ===== MOESI_CMP_directory =====

| |

| − |

| |

| − | ====== Protocol Overview ======

| |

| − |

| |

| − | TODO: Cache Hierarchy

| |

| − |

| |

| − | In contrast with the MESI protocol, the MOESI protocol introduces an additional '''Owned''' state.

| |

| − |

| |

| − | ====== Related Files ======

| |

| − |

| |

| − | * '''src/mem/protocols'''

| |

| − | ** '''MOESI_CMP_directory-L1cache.sm''': L1 cache controller specification

| |

| − | ** '''MOESI_CMP_directory-L2cache.sm''': L2 cache controller specification

| |

| − | ** '''MOESI_CMP_directory-dir.sm''': directory controller specification

| |

| − | ** '''MOESI_CMP_directory-dma.sm''': dma controller specification

| |

| − | ** '''MOESI_CMP_directory-msg.sm''': message type specification

| |

| − | ** '''MOESI_CMP_directory.slicc''': container file

| |

| − |

| |

| − | ====== L1 Cache ======

| |

| − |

| |

| − | * '''Stable States and Invariants'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''MM''' || The cache block is held exclusively by this node and is potentially modified (similar to conventional "M" state).

| |

| − | |-

| |

| − | | '''MM_W''' || The cache block is held exclusively by this node and is potentially modified (similar to conventional "M" state). Replacements and DMA accesses are not allowed in this state. The block automatically transitions to MM state after a timeout.

| |

| − | |-

| |

| − | | '''O''' || The cache block is owned by this node. It has not been modified by this node. No other node holds this block in exclusive mode, but sharers potentially exist.

| |

| − | |-

| |

| − | | '''M''' || The cache block is held in exclusive mode, but not written to (similar to conventional "E" state). No other node holds a copy of this block. Stores are not allowed in this state.

| |

| − | |-

| |

| − | | '''M_W''' || The cache block is held in exclusive mode, but not written to (similar to conventional "E" state). No other node holds a copy of this block. Only loads and stores are allowed. A store silently upgrades the block to MM_W state. Replacements and DMA accesses are not allowed in this state. The block automatically transitions to M state after a timeout.

| |

| − | |-

| |

| − | | '''S''' || The cache block is held in shared state by 1 or more nodes. Stores are not allowed in this state.

| |

| − | |-

| |

| − | | '''I''' || The cache block is invalid.

| |

| − | |}
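The table states three behaviours for the *_W states: a store in M_W silently upgrades to MM_W, both *_W states fall back to their plain counterparts after a timeout, and replacements are forbidden while in a *_W state. The Python sketch below encodes only those stated rules. The enum and function names are illustrative and are not the identifiers used in MOESI_CMP_directory-L1cache.sm; stores from states the table does not describe are deliberately left unmodelled.

```python
from enum import Enum


class L1State(Enum):
    """Stable L1 states from the invariants table (illustrative names)."""
    MM = "MM"
    MM_W = "MM_W"
    O = "O"
    M = "M"
    M_W = "M_W"
    S = "S"
    I = "I"


# States in which the table forbids replacements (and DMA accesses).
NO_REPLACEMENT = {L1State.MM_W, L1State.M_W}

# Timeout targets: each *_W state falls back to its plain counterpart.
TIMEOUT_TARGET = {L1State.MM_W: L1State.MM, L1State.M_W: L1State.M}


def on_store(state: L1State) -> L1State:
    """Apply a store; only the cases stated in the table are modelled."""
    if state in (L1State.MM, L1State.MM_W):
        return state                  # already exclusive and writable
    if state is L1State.M_W:
        return L1State.MM_W           # silent upgrade, no coherence message
    raise NotImplementedError(f"store in {state.name} is not modelled here")


def on_timeout(state: L1State) -> L1State:
    """Leave a *_W state when its timeout fires; other states are unchanged."""
    return TIMEOUT_TARGET.get(state, state)


def can_replace(state: L1State) -> bool:
    """Replacements are disallowed only in the *_W states."""
    return state not in NO_REPLACEMENT
```

For example, a store to a block in M_W followed by a timeout leaves it in MM, whereas a block in S is unaffected by the timeout.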

| |

| − |

| |

| − | * '''Controller'''

| |

| − |

| |

| − | '''The notation used in the controller FSM diagrams is described [[#Coherence_controller_FSM_Diagrams|here]].'''

| |

| − |

| |

| − | [[File:MOESI_CMP_directory_L1cache_FSM.jpg|center]]

| |

| − |

| |

| − | ** '''Optimizations'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Description

| |

| − | |-

| |

| − | | '''SM''' || A GETX has been issued to get exclusive permissions for an impending store to the cache block, but an old copy of the block is still present. Stores and replacements are not allowed in this state.

| |

| − | |-

| |

| − | | '''OM''' || A GETX has been issued to get exclusive permissions for an impending store to the cache block, and the data has been received, but not all expected acknowledgments have arrived yet. Stores and replacements are not allowed in this state.

| |

| − | |}
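In both SM and OM, the pending store cannot complete until the requester holds the data and has collected every expected acknowledgment. A common way to implement this is a signed acknowledgment counter. The Python sketch below is a hypothetical illustration of that technique, not the SLICC code. It assumes that the data response carries the number of acknowledgments to expect. Because acknowledgments may arrive before the data, the counter is allowed to go temporarily negative.

```python
from dataclasses import dataclass


@dataclass
class PendingStore:
    """Hypothetical tracker for a GETX issued from SM or OM."""
    data_received: bool = False
    acks_outstanding: int = 0   # may dip below zero if acks beat the data

    def on_data(self, ack_count: int) -> None:
        # Assumption: the data response tells the requester how many
        # invalidation acknowledgments to expect.
        self.data_received = True
        self.acks_outstanding += ack_count

    def on_ack(self) -> None:
        self.acks_outstanding -= 1

    def store_may_complete(self) -> bool:
        # Stores (and replacements) stay blocked until both conditions hold.
        return self.data_received and self.acks_outstanding == 0
```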

| |

| − |

| |

| − | '''The notation used in the controller FSM diagrams is described [[#Coherence_controller_FSM_Diagrams|here]].'''

| |

| − |

| |

| − | [[File:MOESI_CMP_directory_L1cache_optim_FSM.jpg|center]]

| |

| − |

| |

| − | ====== L2 Cache ======

| |

| − |

| |

| − | * '''Stable States and Invariants'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''NP/I''' || The cache block at this chip is invalid.

| |

| − | |-

| |

| − | | '''ILS''' || The cache block is shared locally by L1 nodes in this chip.

| |

| − | |-

| |

| − | | '''ILX''' || The cache block is held in exclusive mode by some L1 node in this chip.

| |

| − | |-

| |

| − | | '''ILO''' || Some L1 node in this chip is an owner of this cache block.

| |

| − | |-

| |

| − | | '''ILOX''' || The cache block is held exclusively by this chip and some L1 node in this chip is an owner of the block.

| |

| − | |-

| |

| − | | '''ILOS''' || Some L1 node in this chip is an owner of this cache block. There are also potential L1 sharers of this cache block in this chip.

| |

| − | |-

| |

| − | | '''ILOSX''' || The cache block is held exclusively by this chip. Some L1 node in this chip is an owner of the block. There are also potential L1 sharers of this cache block in this chip.

| |

| − | |-

| |

| − | | '''S''' || The cache block is potentially shared across chips, but at this chip there are no local L1 sharers.

| |

| − | |-

| |

| − | | '''O''' || The cache block is owned by this chip, but there are no local L1 sharers of this block.

| |

| − | |-

| |

| − | | '''OLS''' || TODO

| |

| − | |-

| |

| − | | '''OLSX''' || TODO

| |

| − | |-

| |

| − | | '''SLS''' || TODO

| |

| − | |-

| |

| − | | '''M''' || TODO

| |

| − | |}

| |

| − |

| |

| − | * '''Controller'''

| |

| − |

| |

| − | ====== Directory ======

| |

| − |

| |

| − | * '''Stable States and Invariants'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''M''' || The cache block is held in exclusive state by only 1 node (which is also the owner). There are no sharers of this block. The data is potentially different from that in memory.

| |

| − | |-

| |

| − | | '''O''' || The cache block is owned by exactly 1 node. There may be sharers of this block. The data is potentially different from that in memory.

| |

| − | |-

| |

| − | | '''S''' || The cache block is held in shared state by 1 or more nodes. No node has ownership of the block. The data is consistent with that in memory (Check).

| |

| − | |-

| |

| − | | '''I''' || The cache block is invalid.

| |

| − | |}
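The directory invariants above can be written as a consistency check over an owner field and a sharer set. The Python sketch below is illustrative only. It makes two assumptions: the sharer set excludes the owner, and "invalid" at the directory means that no node holds a copy.

```python
from typing import Optional, Set


def check_directory_invariants(state: str, owner: Optional[int],
                               sharers: Set[int]) -> bool:
    """Return True if (owner, sharers) is consistent with the stable state.

    Assumption: the owner, when present, is not also listed in `sharers`.
    """
    if state == "M":   # exactly one node, which is also the owner; no sharers
        return owner is not None and not sharers
    if state == "O":   # exactly one owner; sharers may exist
        return owner is not None and owner not in sharers
    if state == "S":   # one or more sharers; nobody owns the block
        return owner is None and len(sharers) >= 1
    if state == "I":   # no cached copies tracked
        return owner is None and not sharers
    raise ValueError(f"unknown stable state {state!r}")
```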

| |

| − |

| |

| − | * '''Controller'''

| |

| − |

| |

| − | '''The notation used in the controller FSM diagrams is described [[#Coherence_controller_FSM_Diagrams|here]].'''

| |

| − |

| |

| − | [[File:MOESI_CMP_directory_dir_FSM.jpg|center]]

| |

| − |

| |

| − | ====== Other features ======

| |

| − |

| |

| − | * '''Timeouts''':

| |

| − |

| |

| − | ''Rathijit will do it''

| |

| − |

| |

| − | ===== MESI_CMP_directory =====

| |

| − |

| |

| − | ====== '''Protocol Overview''' ======

| |

| − |

| |

| − | * This protocol models a '''two-level cache hierarchy'''. The L1 cache is private to each core, while the L2 cache is shared among the cores. The L1 cache is split into an instruction cache and a data cache.

| |

| − | * '''Inclusion''' is maintained between the L1 and L2 cache.

| |

| − | * At a high level the protocol has four stable states: '''M''', '''E''', '''S''' and '''I'''. A block in '''M''' state is writable (i.e. has exclusive permission) and has been dirtied (i.e. it is the only valid copy on-chip). The '''E''' state represents a cache block with exclusive permission (i.e. writable) that has not been written yet. The '''S''' state means the cache block is only readable, and multiple copies of it may exist in several private caches as well as in the shared cache. '''I''' means that the cache block is invalid.

| |

| − | * The on-chip cache coherence is maintained through '''Directory Coherence''' scheme, where the directory information is co-located with the corresponding cache blocks in the shared L2 cache.

| |

| − | * The protocol has four types of controllers: '''L1 cache controller, L2 cache controller, Directory controller''' and '''DMA controller'''. The L1 cache controller manages the L1 instruction and L1 data caches; there is one L1 cache controller instance per core in the simulated system. The L2 cache controller manages the shared L2 cache and maintains the coherence of on-chip data through the directory coherence scheme. The Directory controller acts as the interface to the memory controller/off-chip main memory, and is also responsible for coherence across multiple chips and for external coherence requests from the DMA controller. The DMA controller is responsible for satisfying coherent DMA requests.

| |

| − | * One of the primary optimizations in this protocol is that when an L1 cache requests a data block, even for read permission only, the L2 cache controller returns the block with exclusive permission if it finds that no other core has the block. This optimization anticipates that a block read by a core will soon be written by the same core, and thus saves an extra request. This is exactly why the '''E''' state exists (i.e. a cache block that is writable but not yet written).

| |

| − | * The protocol supports ''silent eviction'' of ''clean'' cache blocks from the private L1 caches. This means that cache blocks which have not been written to, and which hold only read permission, can be dropped from the private L1 cache without informing the L2 cache. This optimization helps reduce write-back traffic to the L2 cache controller.
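The exclusive-on-read optimization described above can be shown as a small decision function. The Python sketch below is illustrative. Its function names are hypothetical, and it follows the L2 states described later on this page: only a data (not instruction) GETS with no other holders is granted exclusively. It also shows the request that the optimization saves, because a store to a block in E or M needs no further coherence request.

```python
from typing import AbstractSet


def grant_for_gets(other_holders: AbstractSet[int],
                   is_instruction_fetch: bool) -> str:
    """State the requesting L1 ends up in after a GETS (hypothetical sketch).

    other_holders: IDs of other L1 caches that may hold the block.
    """
    if not other_holders and not is_instruction_fetch:
        return "E"   # exclusive but clean: a later store needs no upgrade
    return "S"       # read-only copy


def store_needs_request(l1_state: str) -> bool:
    """A store hits locally only if the L1 already holds exclusive permission."""
    return l1_state not in ("E", "M")
```

For example, `grant_for_gets(set(), False)` yields `"E"`, so the following store proceeds without contacting the L2. With another sharer present, the block arrives in `"S"`, and the store needs an upgrade request.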

| |

| − |

| |

| − | ====== '''Related Files''' ======

| |

| − |

| |

| − | * '''src/mem/protocols'''

| |

| − | ** '''MESI_CMP_directory-L1cache.sm''': L1 cache controller specification

| |

| − | ** '''MESI_CMP_directory-L2cache.sm''': L2 cache controller specification

| |

| − | ** '''MESI_CMP_directory-dir.sm''': directory controller specification

| |

| − | ** '''MESI_CMP_directory-dma.sm''': dma controller specification

| |

| − | ** '''MESI_CMP_directory-msg.sm''': coherence message type specifications. This defines the fields of the different message types used by the protocol.

| |

| − | ** '''MESI_CMP_directory.slicc''': container file

| |

| − |

| |

| − | ====== '''Controller Description''' ======

| |

| − |

| |

| − | * '''L1 cache controller'''

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants and semantics of the state

| |

| − | |-

| |

| − | | '''M''' || The cache block is held in exclusive state by '''only one L1 cache'''. There are no sharers of this block. The data is potentially the only valid copy in the system. The copy of the cache block is '''writable''' as well as '''readable'''.

| |

| − | |-

| |

| − | | '''E''' || The cache block is held with exclusive permission by exactly '''one L1 cache'''. The difference from the '''M''' state is that the cache block is writable (and readable) but has not yet been written.

| |

| − | |-

| |

| − | | '''S''' || The cache block is held in shared state by 1 or more L1 caches and/or by the L2 cache. The block is only '''readable'''. No cache can have the cache block with exclusive permission.

| |

| − | |-

| |

| − | | '''I / NP''' || The cache block is invalid.

| |

| − | |-

| |

| − | | '''IS''' || This is a transient state. A '''GETS (Read)''' request has been issued for the cache block and the controller is awaiting the response. The cache block is neither readable nor writable.

| |

| − | |-

| |

| − | | '''IM''' || This is a transient state. A '''GETX (Write)''' request has been issued for the cache block and the controller is awaiting the response. The cache block is neither readable nor writable.

| |

| − | |-

| |

| − | | '''SM''' || This is a transient state. The cache block was originally in S state, and an '''UPGRADE (Write)''' request has been issued to get exclusive permission for the block; the controller is awaiting the response. The cache block is '''readable'''.

| |

| − | |-

| |

| − | | '''IS_I''' || This is a transient state. While in IS state, the cache controller received an invalidation from the L2 cache's directory. This happens because of a race: another core wrote to the same cache block while this core was trying to obtain the block for reading. The cache block is neither readable nor writable.

| |

| − | |-

| |

| − | | '''M_I''' || This is a transient state. The cache is replacing a cache block in '''M''' state; the write-back (PUTX) to the L2 cache's directory has been issued, but the write-back acknowledgement has not yet arrived.

| |

| − | |-

| |

| − | | '''SINK_WB_ACK''' || This is a transient state. It is reached when, while waiting for the write-back acknowledgement from the L2 cache's directory, the L1 cache receives an intervention (a request forwarded from another core). This indicates a race between the issued write-back and another cache's request, which the write-back has lost (i.e. the other core's request reached the L2 cache's directory before the write-back did). This state is essential to avoid complicated race conditions that could arise if write-backs were silently dropped at the directory.

| |

| − | |-

| |

| − |

| |

| − | |}
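The IS and IS_I rows describe the path of a load miss, including the race with an invalidation. The Python sketch below walks a block through that path as a simplified, hypothetical event sequence; the event names are not the SLICC event names. It assumes that a block in IS_I satisfies the pending load with the arriving data once and then returns to I without keeping the copy, because that copy has already been invalidated.

```python
from typing import Iterable, Tuple


def l1_load_miss(events: Iterable[str]) -> Tuple[str, bool]:
    """Walk an L1 block through a load miss (simplified sketch).

    events: "inv", "data_shared" or "data_exclusive" (hypothetical names).
    Returns (final_state, load_satisfied).
    """
    state = "IS"                      # GETS issued from I/NP
    for ev in events:
        if state == "IS" and ev == "inv":
            state = "IS_I"            # a racing write invalidated the block
        elif state == "IS" and ev == "data_shared":
            return "S", True
        elif state == "IS" and ev == "data_exclusive":
            return "E", True          # see the E-state optimization above
        elif state == "IS_I" and ev.startswith("data"):
            # Assumption: the load consumes the data once, but the copy is
            # not kept because it was already invalidated.
            return "I", True
        else:
            raise ValueError(f"unexpected event {ev!r} in state {state}")
    return state, False               # still waiting for the response
```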

| |

| − |

| |

| − | * '''L2 cache controller'''

| |

| − |

| |

| − | Recall that the on-chip directory is co-located with the corresponding cache blocks in the L2 cache. Thus the following L2 states encode both the status and permissions of the block in the L2 cache and the coherence status of the block in one or more private L1 caches. Beyond the coherence state, each cache block has two more important fields that help the controller take the correct coherence actions. The '''Sharers''' field can be thought of as a bit-vector indicating which private L1 caches potentially hold the block. The '''Owner''' field holds the identity of the private L1 cache that holds the block with exclusive permission, if any.
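The Sharers and Owner fields can be pictured as a small per-block record. The Python sketch below is hypothetical and does not reproduce the SLICC entry type. It stores the sharers as an integer bit-vector and computes which L1 caches must be invalidated before a requester gets exclusive permission. Because clean L1 blocks can be evicted silently, a set bit means only that the L1 cache *may* hold the block.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class L2DirEntry:
    """Hypothetical per-block directory fields co-located with an L2 block."""
    sharers: int = 0               # bit i set => L1 cache i may hold the block
    owner: Optional[int] = None    # L1 holding the block exclusively (MT state)

    def add_sharer(self, l1_id: int) -> None:
        self.sharers |= 1 << l1_id

    def remove_sharer(self, l1_id: int) -> None:
        self.sharers &= ~(1 << l1_id)

    def invalidation_targets(self, requester: int, num_l1: int) -> List[int]:
        """L1 caches to invalidate before `requester` gets exclusivity."""
        return [i for i in range(num_l1)
                if ((self.sharers >> i) & 1) and i != requester]
```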

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants and semantics of the state

| |

| − | |-

| |

| − | | '''NP''' || The cache block is not present in the on-chip cache hierarchy.

| |

| − | |-

| |

| − | | '''SS''' || The cache block is present in potentially multiple private caches in read-only mode (i.e. in "S" state in the private caches). The corresponding "Sharers" vector identifies the private caches that possibly hold the block. The cache block in the L2 cache is valid and '''readable'''.

| |

| − | |-

| |

| − | | '''M''' || The cache block is present ONLY in the L2 cache and has exclusive permission. Read/write requests (GETS/GETX) from the L1 caches can be satisfied directly from the L2 cache.

| |

| − | |-

| |

| − | | '''MT''' || The cache block is in ONE of the private L1 caches with exclusive permission. The data in the L2 cache is potentially stale. The identity of the L1 cache that holds the block can be found in the "Owner" field associated with the cache block. Any read/write request (GETS/GETX) from other cores/private L1 caches needs to be forwarded to the owner of the cache block; the L2 cannot service such requests itself.

| |

| − | |-

| |

| − | | '''M_I''' || This is a transient state. The cache is replacing the cache block; the write-back (PUTX/PUTS) to the Directory controller (which acts as the interface to main memory) has been issued, but the write-back acknowledgement has not yet arrived. The data is neither readable nor writable.

| |

| − | |-

| |

| − | | '''MT_I''' || This is a transient state. The cache is replacing a cache block in '''MT''' state. An invalidation has been sent to the current owner (a private L1 cache) of the block, and the L2 is waiting for the write-back from the owner L1 cache. Note that this invalidation (called a back-invalidation) is instrumental in maintaining inclusion between the L1 and L2 caches. The data is neither readable nor writable.

| |

| − | |-

| |

| − | | '''MCT_I''' || This is a transient state. It is the same as '''MT_I''', except that the data in the L2 cache is known to be ''clean''. The data is neither readable nor writable.

| |

| − | |-

| |

| − | | '''I_I''' || This is a transient state. The L2 cache is replacing a cache block in the '''SS''' state, and the block in the L2 is ''clean''. Invalidations have been sent to all potential sharers (L1 caches) of the block, and the L2 cache's directory is waiting for all the required acknowledgements from the L1 caches. Note that this invalidation (called a back-invalidation) is instrumental in maintaining inclusion between the L1 and L2 caches. The data is neither readable nor writable.

| |

| − | |-

| |

| − | | '''S_I''' || This is a transient state. It is the same as '''I_I''', except that the L2 cache's copy of the block is ''dirty''. Thus, unlike in '''I_I''', the data must be sent to main memory. The cache block is neither readable nor writable.

| |

| − | |-

| |

| − | | '''ISS''' || This is a transient state. The L2 has received a '''GETS (read)''' request from one of the private L1 caches for a cache block that is not present in the on-chip caches. A read request has been sent to main memory (the Directory controller), and the L2 is waiting for the response. This state is reached only when the request is for a data cache block (not an instruction cache block). The purpose of this state is that, if only one L1 cache has requested the block, the block is returned to the requester with exclusive permission (although only read permission was requested). The cache block is neither readable nor writable.

| |

| − | |-

| |

| − | | '''IS''' || This is a transient state. It is similar to '''ISS''', except that this state is reached instead of '''ISS''' if the requested cache block is an instruction cache block, or if more than one core requests the same block while the L2 is waiting for the response from memory. Once the requested block arrives from main memory, it is sent to the requester(s) with read-only permission. The cache block is neither readable nor writable in this state.

| |

| − | |-

| |

| − | | '''IM''' || This is a transient state. It is reached when the L2 cache receives an L1 GETX (write) request for a cache block that is not present in the on-chip cache hierarchy. A request for the block in exclusive mode has been issued to main memory, but the response has not yet arrived. The cache block is neither readable nor writable in this state.

| |

| − | |-

| |

| − | | '''SS_MB''' || This is a transient state. In general, any state whose name ends with "B" (like this one) is a ''blocking'' coherence state: the directory is waiting for some response from a private L1 cache, and until it receives that response it does not service any other request for the block (i.e. requests are effectively serialized). This particular state is reached when an L1 cache requests a block with exclusive permission (i.e. GETX or UPGRADE) while the block is in the '''SS''' state, meaning that readable copies potentially exist in the private L1 caches. Before the requester can be given exclusive permission, all those readable copies must be invalidated. In this state, the required invalidations have been sent to the potential sharers (L1 caches), and the requester has been told how many invalidation acknowledgements it needs before it holds exclusive permission for the block. Once the requesting L1 cache has received the required number of invalidation acknowledgements, it informs the directory with an ''UNBLOCK'' message, which moves the directory out of this blocking state so that it can resume servicing other requests for the block. The cache block is neither readable nor writable in this state.

| |

| − | |-

| |

| − | | '''MT_MB''' || This is a transient and ''blocking'' state. It is reached when the L2 cache's directory has sent a cache block with exclusive permission to a requesting L1 cache but has not yet received the ''UNBLOCK'' from that L1 cache acknowledging receipt of exclusive permission. The cache block is neither readable nor writable in this state.

| |

| − | |-

| |

| − | | '''MT_IIB''' || This is a transient and ''blocking'' state. It is reached when a read (GETS) request is received for a cache block that is currently held with exclusive permission in another private L1 cache (i.e. the directory state is '''MT'''). On such a request, the L2 cache's directory forwards the request to the current owner L1 cache and transitions to this state. Two events must happen before the block can be unblocked (and further requests for it serviced): the current owner must send a write-back to the L2 cache to update the L2's copy with the latest value, and the requesting L1 cache must send an ''UNBLOCK'' to the L2 cache indicating that it has received the block with the desired coherence permissions. The cache block is neither readable nor writable in this state in the L2 cache.

| |

| − | |-

| |

| − | | '''MT_IB''' || This is a transient and ''blocking'' state. It is reached when, in '''MT_IIB''' state, the L2 cache controller receives the ''UNBLOCK'' from the requesting L1 cache but has not yet received the write-back from the previous owner L1 cache. The cache block is neither readable nor writable in this state in the L2 cache.

| |

| − | |-

| |

| − | | '''MT_SB''' || This is a transient and ''blocking'' state. It is reached when, in '''MT_IIB''' state, the L2 cache controller receives the write-back from the previous owner L1 cache but has not yet received the ''UNBLOCK'' from the current requester. The cache block is neither readable nor writable in this state in the L2 cache.

| |

| − | |}
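The blocking states (names ending in "B") serialize requests per block. While a block waits for an ''UNBLOCK'', later requests to that block are held back, but requests to other blocks proceed. The Python sketch below is a simplified, hypothetical model of that behaviour. It assumes that every serviced request puts the block into a blocking state. In the real protocol, only some transitions block, and stalled requests are woken up through the controller's own mechanisms.

```python
from collections import deque
from typing import Deque, Dict, List, Set, Tuple


class BlockingDirectory:
    """Sketch of per-block request serialization (e.g. SS_MB, MT_MB)."""

    def __init__(self) -> None:
        self.blocked: Set[int] = set()                  # blocks awaiting UNBLOCK
        self.stalled: Dict[int, Deque[str]] = {}        # block -> waiting requests
        self.serviced: List[Tuple[int, str]] = []       # order of service

    def request(self, addr: int, req: str) -> None:
        if addr in self.blocked:
            self.stalled.setdefault(addr, deque()).append(req)
            return
        self.serviced.append((addr, req))
        self.blocked.add(addr)   # assumption: every request awaits an UNBLOCK

    def unblock(self, addr: int) -> None:
        self.blocked.discard(addr)
        waiting = self.stalled.get(addr)
        if waiting:
            self.request(addr, waiting.popleft())       # service the oldest waiter
```

For example, a GETS that arrives for a block in SS_MB is serviced only after the GETX requester's ''UNBLOCK'', whereas a request to a different block is serviced immediately.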

| |

| − |

| |

| − | ===== Network_test =====

| |

| − | This is a dummy cache coherence protocol that is used to operate the Ruby network tester. The details about running the network tester can be found [[networktest|here]].

| |

| − |

| |

| − | ====== Related Files ======

| |

| − |

| |

| − | * '''src/mem/protocols'''

| |

| − | ** '''Network_test-cache.sm''': cache controller specification

| |

| − | ** '''Network_test-dir.sm''': directory controller specification

| |

| − | ** '''Network_test-msg.sm''': message type specification

| |

| − | ** '''Network_test.slicc''': container file

| |

| − |

| |

| − | ====== Cache Hierarchy ======

| |

| − |

| |

| − | This protocol assumes a 1-level cache hierarchy. The role of the cache is simply to send messages from the CPU to the appropriate directory (based on the address), in the appropriate virtual network (based on the message type). It does not track any state. In fact, unlike in other protocols, no CacheMemory is created. The directory receives the messages from the caches, but does not send any back. The goal of this protocol is to enable simulation/testing of just the interconnection network.

| |

| − |

| |

| − | ====== Stable States and Invariants ======

| |

| − |

| |

| − | {| border="1" cellpadding="10" class="wikitable"

| |

| − | ! States !! Invariants

| |

| − | |-

| |

| − | | '''I''' || Default state of all cache blocks

| |

| − | |}

| |

| − |

| |

| − | ====== Cache controller ======

| |

| − |

| |

| − | * Requests, Responses, Triggers:

| |

| − | ** Load, Instruction fetch, Store from the core.

| |

| − | The network tester (in src/cpu/testers/networktest/networktest.cc) generates packets of the type '''ReadReq''', '''INST_FETCH''', and '''WriteReq''', which are converted into '''RubyRequestType:LD''', '''RubyRequestType:IFETCH''', and '''RubyRequestType:ST''', respectively, by the RubyPort (in src/mem/ruby/system/RubyPort.hh/cc). These messages reach the cache controller via the Sequencer. The destination for these messages is determined by the traffic type, and embedded in the address. More details can be found [[networktest|here]].

| |

| − |

| |

| − | * Main Operation:

| |

| − | ** The goal of the cache is only to act as a source node in the underlying interconnection network. It does not track any states.

| |

| − | ** On a '''LD''' from the core:

| |

| − | *** it returns a hit, and

| |

| − | *** maps the address to a directory, and issues a message to it of type '''MSG''' and size '''Control''' (8 bytes) in the request vnet (0).

| |

| − | *** Note: vnet 0 could also be made to broadcast, instead of sending a directed message to a particular directory, by uncommenting the appropriate line in the ''a_issueRequest'' action in Network_test-cache.sm

| |

| − | ** On a '''IFETCH''' from the core:

| |

| − | *** it returns a hit, and

| |

| − | *** maps the address to a directory, and issues a message to it of type '''MSG''' and size '''Control''' (8 bytes) in the forward vnet (1).

| |

| − | ** On a '''ST''' from the core:

| |

| − | *** it returns a hit, and

| |

| − | *** maps the address to a directory, and issues a message to it of type '''MSG''' and size '''Data''' (72 bytes) in the response vnet (2).

| |

| − | ** Note: request, forward and response are just used to differentiate the vnets, but do not have any physical significance in this protocol.
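The cache controller's behaviour above amounts to a fixed lookup from request type to virtual network and message size, plus an address-to-directory mapping. The Python sketch below reproduces the vnet and size table from the list. The destination computation is an assumption for illustration only: it uses simple block interleaving across directories, whereas the network tester embeds the destination in the address, and Ruby's actual mapping may differ.

```python
from typing import Tuple

CONTROL_BYTES = 8    # size '''Control'''
DATA_BYTES = 72      # size '''Data'''

# Request type -> (vnet, message size in bytes), as listed above.
ROUTING = {
    "LD":     (0, CONTROL_BYTES),   # "request" vnet
    "IFETCH": (1, CONTROL_BYTES),   # "forward" vnet
    "ST":     (2, DATA_BYTES),      # "response" vnet
}


def inject(request_type: str, address: int, num_dirs: int,
           block_bytes: int = 64) -> Tuple[int, int, int]:
    """Return (destination_directory, vnet, size_bytes) for one request.

    Assumption: block-interleaved directories; block_bytes is illustrative.
    Every request is also reported to the core as a hit.
    """
    vnet, size = ROUTING[request_type]
    dest = (address // block_bytes) % num_dirs
    return dest, vnet, size
```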

| |

| − |

| |

| − | ====== Directory controller ======

| |

| − |

| |

| − | * Requests, Responses, Triggers:

| |

| − | ** '''MSG''' from the cores

| |

| − |

| |

| − | * Main Operation:

| |

| − | ** The goal of the directory is only to act as a destination node in the underlying interconnection network. It does not track any states.

| |

| − | ** The directory simply pops its incoming queue upon receiving the message.

| |

| − |

| |

| − | ====== Other features ======

| |

| − |

| |

| − | ** This protocol assumes only 3 vnets.

| |

| − | ** It should only be used when running the ruby network test.

| |

| − |

| |

| − | ==== Protocol Independent Memory components ====

| |

| − | ===== System =====

| |

| − |

| |

| − | This is a high-level container for a few of the important components of Ruby that may need to be accessed from various parts of Ruby. Only '''ONE''' instance of this class is created, and it is globally available through a pointer named '''''g_system_ptr'''''. It holds a pointer to Ruby's profiler object, which allows any component of Ruby to get hold of the profiler and collect statistics in a central location by accessing it through '''''g_system_ptr'''''. It also holds important information about the memory hierarchy, such as the cache block size and the physical memory size, and makes it available to all parts of Ruby as required. It also holds pointers to the on-chip network, to Ruby's wrapper for the simulator event queue (called RubyEventQueue), and to the simulated physical memory (the variable '''''m_mem_vec_ptr'''''). In sum, the System class in Ruby just acts as a container of pointers to some important objects of Ruby's memory system, and makes them globally available to all of Ruby by exposing itself through '''''g_system_ptr'''''.

| |

| − |

| |

| − | ====== Parameters ======

| |

| − | # '''''random_seed''''' is the seed used to randomize delays in Ruby. This allows simulating multiple runs with slightly perturbed delays/timings.

| |

| − | # '''''randomization''''', when turned on, asks Ruby to randomly delay messages. This flag is useful when stress testing a system to expose corner cases. This flag should NOT be turned on when collecting simulation statistics.

| |

| − | # '''''clock''''' is the parameter for setting the clock frequency (on-chip).

| |

| − | # '''''block_size_bytes''''' specifies the size of cache blocks in bytes.

| |

| − | # '''''mem_size''''' specifies the physical memory size of the simulated system.

| |

| − | # '''''network''''' gives the pointer to the on-chip network for Ruby.

| |

| − | # '''''profiler''''' gives the pointer to the profiler of Ruby.

| |

| − | # '''''tracer''''' is the pointer to Ruby's memory request tracer. The tracer is primarily used to play back a memory request trace in order to warm up Ruby's caches before the actual simulation starts.
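The interplay of '''''randomization''''' and '''''random_seed''''' can be illustrated with a seeded random number generator. When randomization is off, every message sees the same base latency. When it is on, a seeded generator adds extra delay, so a given seed reproduces the same perturbed run and different seeds explore different timings. The Python sketch below is purely illustrative. The function name and the `max_extra` bound are hypothetical and do not describe how Ruby draws its delays.

```python
import random
from typing import List


def message_delays(base_latency: int, n: int, randomization: bool,
                   random_seed: int, max_extra: int = 5) -> List[int]:
    """Per-message delays in cycles (hypothetical sketch).

    max_extra is an illustrative bound on the added random delay.
    """
    if not randomization:
        return [base_latency] * n                  # deterministic timing
    rng = random.Random(random_seed)               # same seed -> same run
    return [base_latency + rng.randint(0, max_extra) for _ in range(n)]
```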

| |

| − |

| |

| − | ====== '''Related files''' ======

| |

| − | * '''src/mem/ruby/system'''

| |

| − | ** '''System.hh/cc''': contains code for the System

| |

| − | ** '''RubySystem.py''': the corresponding python file with parameters.

| |

| − |

| |

| − | ===== Sequencer =====

| |

| − |

| |

| − | '''''Sequencer''''' is one of the most important classes in Ruby: every memory request must pass through it at least twice, once before being serviced by the cache coherence mechanism and once just after being serviced. There is one Sequencer instance for each hardware thread being simulated. For example, when simulating a 16-core system in which each core has a single hardware thread context, there would be 16 Sequencer objects in the system, each responsible for ''managing'' memory requests (loads, stores, atomic operations, etc.) from one hardware thread context. The ''i''th Sequencer object handles requests only from the ''i''th hardware context (in the above example, the ''i''th core). Each Sequencer assumes that it has access to the L1 instruction and data caches attached to its core.

| |

| − |

| |

| − | Following are the primary responsibilities of Sequencer:

| |

| − | # Injecting all memory requests into the underlying cache hierarchy (and coherence protocol) and accounting for them.

| |

| − | # Resource allocation and accounting (e.g. makes sure a particular core/hardware thread does not have more than specified number of outstanding memory requests).

| |

| − | # Making sure atomic operations are handled properly.

| |

| − | # Making sure that the underlying cache hierarchy and coherence protocol are making forward progress.

| |

| − | # Once a request is serviced by the underlying cache hierarchy, returning the result to the corresponding port of the frontend (i.e. M5).

| |

| − |

| |

| − | ====== '''Parameters''' ======

| |

| − | # '''''icache''''' is the parameter where the L1 Instruction cache pointer is passed.

| |

| − | # '''''dcache''''' is the parameter where the L1 Data cache pointer is passed.

| |

| − | # '''''max_outstanding_requests''''' specifies the maximum allowed number of outstanding memory requests from a given core or hardware thread.

| |

| − | # '''''deadlock_threshold''''' specifies the number of cycles (Ruby cycles) after which, if a given memory request has still not been satisfied by the cache hierarchy, a possible deadlock (or lack of forward progress) is declared.

| |

| − |

| |

| − | ====== '''Related files''' ======

| |

| − | * '''src/mem/ruby/system'''

| |

| − | ** '''Sequencer.hh/cc''': contains code for the Sequencer

| |

| − | ** '''Sequencer.py''': the corresponding Python file with parameters.

| |

| − |

| |

| − | ====== '''More detailed operation description''' ======

| |

| − | In this section, we describe the operation of the Sequencer in more detail.

| |

| − | * '''Injection of memory request to cache hierarchy, request accounting and resource allocation:'''

| |

| − |

| |

| − | : The entry point for a memory request into the Sequencer is a method called '''''makeRequest'''''. This method is called from the corresponding RubyPort (Ruby's wrapper for an M5 port). Once it receives the request, the Sequencer checks whether any resource limits must be enforced by calling a method called '''''getRequestStatus'''''. This method makes sure that a given Sequencer (i.e., a core or hardware thread context) does NOT issue multiple simultaneous requests to the same cache block. If the current request is for a cache block that already has a pending request from the same Sequencer, the current request is not issued to the cache hierarchy. Instead, it waits until the earlier request to that cache block has been satisfied. In the code, this is done by checking two request accounting tables, ''m_writeRequestTable'' and ''m_readRequestTable'', and setting the status to ''RequestStatus_Aliased''. The method also makes sure that the number of outstanding memory requests from a given Sequencer does not exceed the limit set by ''max_outstanding_requests''.
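The check performed by '''''getRequestStatus''''' can be sketched as follows. This is a minimal, hypothetical Python illustration and not gem5's C++ implementation. The 64-byte block size, the ''BUFFER_FULL'' status, and the names ''get_request_status'' and ''block_address'' are assumptions made for the example. Only the aliasing rule, the outstanding-request limit, and the two request tables come from the description above.

```python
from enum import Enum, auto

BLOCK_SIZE = 64  # assumed cache-line size in bytes (must be a power of two)


class RequestStatus(Enum):
    READY = auto()        # request may be issued to the cache hierarchy
    ALIASED = auto()      # a request to the same block is already pending
    BUFFER_FULL = auto()  # hypothetical: outstanding-request limit reached


def block_address(addr: int) -> int:
    """Clear the offset bits so every byte of a line maps to one key."""
    return addr & ~(BLOCK_SIZE - 1)


def get_request_status(addr, read_table, write_table, max_outstanding):
    """Decide whether a new request may be issued.

    read_table / write_table map the block addresses of pending requests to
    request records (stand-ins for m_readRequestTable / m_writeRequestTable).
    """
    line = block_address(addr)
    if line in read_table or line in write_table:
        return RequestStatus.ALIASED
    if len(read_table) + len(write_table) >= max_outstanding:
        return RequestStatus.BUFFER_FULL
    return RequestStatus.READY
```

For example, while a load to block ''0x1000'' is pending, a request to ''0x1008'' falls in the same 64-byte block. It is therefore reported as aliased and must wait.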

| |

| − | : If the current request does not violate any of the above constraints, it is registered for accounting purposes by calling the method '''''insertRequest'''''. Depending on the type of the request, this method creates an entry for it in either ''m_writeRequestTable'' or ''m_readRequestTable''. These two tables record, respectively, the write and read requests that the Sequencer has issued to the cache hierarchy but that have not yet been satisfied. Finally, the memory request is pushed to the cache hierarchy by calling the method '''''issueRequest'''''. This method creates the request structure that the underlying SLICC-generated coherence protocol implementation and cache hierarchy understand. It sets the request type and mode accordingly and creates an object of class ''RubyRequest'' for the current request. The L1 instruction or L1 data cache access latency is then accounted for, and the request is handed to the cache hierarchy and coherence mechanism for processing by ''enqueue''-ing it on the mandatory queue (''m_mandatory_q_ptr''). Every request reaches the corresponding L1 cache controller through this mandatory queue, and the cache hierarchy is then responsible for satisfying it.
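The ''insertRequest'' / ''issueRequest'' path can be sketched in simplified form, again in Python rather than gem5's C++. The sketch assumes the status check has already passed. The ''SequencerSketch'' and ''RubyRequestSketch'' classes, the rule that only stores go into the write table, and the fixed ''l1_latency'' are hypothetical simplifications. A ''deque'' stands in for the mandatory queue (''m_mandatory_q_ptr''). Each entry is tagged with the cycle at which the L1 controller may dequeue it.

```python
from collections import deque
from dataclasses import dataclass

BLOCK_SIZE = 64  # assumed cache-line size in bytes


@dataclass
class RubyRequestSketch:
    """Hypothetical stand-in for the RubyRequest object built by issueRequest."""
    line_addr: int
    req_type: str      # e.g. "LD", "ST", "IFETCH"
    issue_cycle: int


class SequencerSketch:
    def __init__(self, l1_latency: int = 1):
        self.read_table = {}            # pending reads, keyed by block address
        self.write_table = {}           # pending writes, keyed by block address
        self.mandatory_queue = deque()  # stands in for m_mandatory_q_ptr
        self.l1_latency = l1_latency    # assumed fixed L1 access latency

    def insert_request(self, addr: int, req_type: str, cycle: int) -> RubyRequestSketch:
        """Record the request in the read or write table for accounting."""
        line = addr & ~(BLOCK_SIZE - 1)
        req = RubyRequestSketch(line, req_type, cycle)
        table = self.write_table if req_type == "ST" else self.read_table
        table[line] = req
        return req

    def issue_request(self, req: RubyRequestSketch, cycle: int) -> None:
        """Enqueue the request for the L1 controller after the L1 latency."""
        ready_cycle = cycle + self.l1_latency
        self.mandatory_queue.append((ready_cycle, req))

    def make_request(self, addr: int, req_type: str, cycle: int) -> None:
        # Assumes a getRequestStatus-style check has already returned "ready".
        req = self.insert_request(addr, req_type, cycle)
        self.issue_request(req, cycle)
```

This sketch keeps accounting (the tables) separate from delivery (the queue), so the tables still record a request after it has been handed off and until a callback removes it.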

| |

| − |

| |

| − | * ''' Deadlock/lack of forward progress detection: '''

| |

| − | : As mentioned earlier, another responsibility of the Sequencer is to make sure that the cache hierarchy is making progress in servicing the memory requests that have been issued. The Sequencer does this by periodically waking up and scanning the ''m_writeRequestTable'' and ''m_readRequestTable'' tables, which hold its currently outstanding requests, to find requests that have been issued but not yet satisfied by the cache hierarchy. If it finds any unsatisfied request that was issued more than ''m_deadlock_threshold'' cycles ago (set by the ''deadlock_threshold'' parameter), it reports a possible deadlock in the cache hierarchy. Note that although it reports a possible deadlock, what it actually detects is a lack of forward progress. This deadlock detection mechanism can therefore produce false positives.
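The periodic scan can be sketched as follows. This is a hypothetical Python helper, not gem5 code. To keep it short, each table maps a block address directly to its issue cycle instead of to a full request record.

```python
def check_for_deadlock(tables, current_cycle, deadlock_threshold):
    """Return block addresses whose requests have been outstanding too long.

    Each table maps a block address to the cycle at which its request was
    issued (a simplification of m_readRequestTable / m_writeRequestTable).
    A non-empty result signals only a *possible* deadlock: the scan detects
    a lack of forward progress, so false positives are possible.
    """
    stalled = []
    for table in tables:
        for line, issue_cycle in table.items():
            if current_cycle - issue_cycle > deadlock_threshold:
                stalled.append(line)
    return stalled
```

In a simulator, a check like this would be rescheduled periodically, for example every ''deadlock_threshold'' cycles, for as long as requests remain outstanding.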

| |

| − |

| |

| − | * ''' Sending results back to the front-end and making sure atomic operations are handled properly:'''

| |

| − | : Once the cache hierarchy satisfies a request, it calls the Sequencer's '''''readCallback''''' or '''''writeCallback''''' method, depending on the type of the request. Note that the time taken to service the request is accounted for automatically through event scheduling. This works because '''''readCallback''''' and '''''writeCallback''''' are called only after the number of cycles required to satisfy the request has elapsed. These two methods remove the request's record from ''m_readRequestTable'' or ''m_writeRequestTable''. Also, if the request is part of an atomic operation (e.g., RMW or LL/SC), appropriate actions are taken to make sure that the semantics of atomic operations are respected.
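The bookkeeping in '''''readCallback''''' and '''''writeCallback''''' can be sketched as follows. The load-linked/store-conditional handling here is a deliberately simplified, hypothetical model: a single reservation register that an invalidation clears. It is not necessarily how gem5 enforces atomic semantics, and the class and method names are illustrative only.

```python
class CallbackSketch:
    """Hypothetical model of the read/write callback bookkeeping."""

    def __init__(self):
        self.read_table = {}      # block address -> issue cycle
        self.write_table = {}     # block address -> issue cycle
        self.reservation = None   # block reserved by the last load-linked

    def invalidate(self, line):
        """Another agent wrote the block, so a pending LL reservation is lost."""
        if self.reservation == line:
            self.reservation = None

    def read_callback(self, line, cycle, load_linked=False):
        issue_cycle = self.read_table.pop(line)  # remove the accounting record
        if load_linked:
            self.reservation = line
        return cycle - issue_cycle               # observed service latency

    def write_callback(self, line, cycle, store_conditional=False):
        issue_cycle = self.write_table.pop(line)
        success = True
        if store_conditional:
            # The SC succeeds only if the reservation survived since the LL.
            success = self.reservation == line
            self.reservation = None
        return cycle - issue_cycle, success
```

Because the latency is computed as the difference between the callback cycle and the issue cycle, the sketch mirrors the point that service time falls out of event scheduling rather than being added explicitly.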

| |

| − | : After that, a method called '''''hitCallback''''' is called. This method collects some statistics by calling functions on Ruby's profiler. For write requests, the data is actually updated in this function, not while the request is simulated through the cache hierarchy and coherence protocol. Finally, '''''ruby_hit_callback''''' is called, which sends the packet back to the front-end to signal that Ruby has finished processing the memory request.
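The division of labor in '''''hitCallback''''' can be sketched as follows. The key point is that timing is simulated first, and data is written only at completion. This is a hypothetical Python model. A dictionary stands in for block data, a ''Counter'' stands in for Ruby's profiler, and a list stands in for the path back to the front-end through '''''ruby_hit_callback'''''.

```python
from collections import Counter


class HitCallbackSketch:
    """Hypothetical model of hitCallback: profiling, data update, response."""

    def __init__(self):
        self.memory = {}          # byte address -> value; stands in for block data
        self.stats = Counter()    # stands in for calls into Ruby's profiler
        self.responses = []       # stands in for packets returned to the front-end

    def hit_callback(self, req_type, addr, payload=b"", size=0):
        self.stats[req_type] += 1
        if req_type == "ST":
            # Writes update the data only here, after timing was simulated.
            for offset, byte in enumerate(payload):
                self.memory[addr + offset] = byte
            data = b""
        else:
            data = bytes(self.memory.get(addr + i, 0) for i in range(size))
        # Analogue of ruby_hit_callback: hand the completed packet back.
        self.responses.append((req_type, addr, data))
        return data
```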

| |

| − |

| |

| − | ===== CacheMemory and Cache Replacement Policies =====

| |

| − |

| |

| − |

| |

| − | This module can model any '''set-associative cache structure''' with a given associativity and size. Each instance of the module models a '''single bank''' of a cache. Thus each type of cache in the system (e.g., L1 instruction, L1 data, L2) and every bank of a cache needs its own instance of this module. The module can also model a direct-mapped cache when the associativity is set to 1, and a fully associative cache when the associativity equals the total number of cache lines (i.e., a single set). In the Ruby memory system, this module is primarily expected to be accessed by the SLICC-generated code of the coherence protocol being modeled.

| |

| − | ====== '''Basic Operation''' ======

| |

| − | This module models the set-associative structure as a two-dimensional (2D) array. Each row of the array represents a set of the set-associative cache structure, and each column represents a way. The number of columns equals the given associativity (parameter). The number of rows is determined by the desired size of the structure (parameter), the associativity (parameter), and the cache line size (parameter).
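The 2D organization above can be sketched as a small tag array. In this sketch, the number of rows is size / (associativity × line size), and an address maps to a row by taking its line address modulo the number of sets. This is a hypothetical Python model, not gem5's ''CacheMemory'' C++ class. For simplicity it stores the full line address in place of a tag and has no replacement policy. Its method names echo the CacheMemory functions described in this section, but they are simplified stand-ins.

```python
class SetAssocTagsSketch:
    """Hypothetical tag array: rows are sets, columns are ways."""

    def __init__(self, size_bytes: int, assoc: int, line_size: int):
        if size_bytes % (assoc * line_size):
            raise ValueError("size must be a multiple of assoc * line_size")
        self.line_size = line_size
        self.assoc = assoc
        self.num_sets = size_bytes // (assoc * line_size)   # number of rows
        # None marks an empty (invalid) way.
        self.tags = [[None] * assoc for _ in range(self.num_sets)]

    def line_address(self, addr: int) -> int:
        return addr // self.line_size

    def set_index(self, addr: int) -> int:
        return self.line_address(addr) % self.num_sets

    def is_tag_present(self, addr: int) -> bool:
        return self.line_address(addr) in self.tags[self.set_index(addr)]

    def cache_avail(self, addr: int) -> bool:
        """True if the set still has an empty way (no replacement needed)."""
        return None in self.tags[self.set_index(addr)]

    def allocate(self, addr: int) -> int:
        """Place the line in the first empty way; caller checks cache_avail."""
        row = self.tags[self.set_index(addr)]
        way = row.index(None)
        row[way] = self.line_address(addr)
        return way
```

For example, a 32 KiB, 4-way cache with 64-byte lines has 32768 / (4 × 64) = 128 sets. With these formulas, ''assoc = 1'' gives a direct-mapped cache, and setting ''assoc'' to ''size_bytes // line_size'' leaves a single set, i.e., a fully associative cache.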

| |

| − | This module exposes six important functions that coherence protocols use to manage the caches.

| |

| − | # It allows querying whether a given cache line address is present in the set-associative structure being modeled, through a function named '''''isTagPresent'''''. This function returns ''true'' if and only if the given cache line address is present.

| |

| − | # It allows a lookup operation, through a function named '''''lookup''''', which returns the cache entry for a given cache line address if it is present. It returns NULL if no block with the given address is present in the set-associative cache structure.

| |

| − | # It allows allocating a new cache entry in the set-associative structure through a function named '''''allocate'''''.

| |

| − | # It allows deallocating the cache entry for a given cache line address through a function named '''''deallocate'''''.

| |

| − | # It can be queried to find out whether allocating an entry for a given cache line address would require replacing another entry in the designated set (derived from the cache line address). This functionality is provided through the '''''cacheAvail''''' function. For a given cache line address, it returns true if NO other entry in the same set needs to be replaced to make room for a new entry with that address.

| |